K-VQG: Knowledge-aware Visual Question Generation for Common-sense Acquisition

WACV 2023 (Accepted)

Kohei Uehara1, Tatsuya Harada1, 2

1The University of Tokyo, 2RIKEN

Abstract

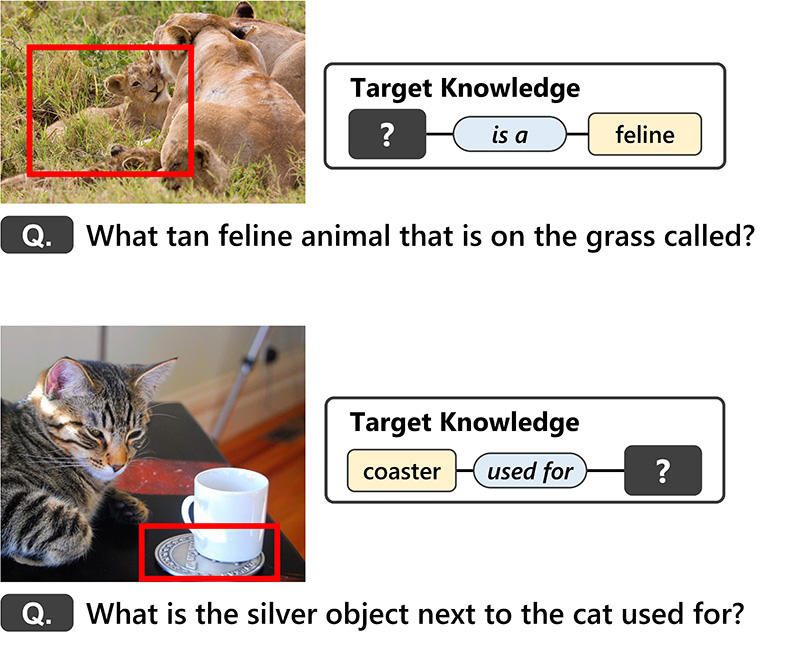

Visual Question Generation (VQG) is a task to generate questions from images. When humans ask questions about an image, their goal is often to acquire some new knowledge. However, existing studies on VQG have mainly addressed question generation from answers or question categories, overlooking the objectives of knowledge acquisition. To introduce a knowledge acquisition perspective into VQG, we constructed a novel knowledge-aware VQG dataset called K-VQG. This is the first large, humanly annotated dataset in which questions regarding images are tied to structured knowledge. We also developed a new VQG model that can encode and use knowledge as the target for a question. The experiment results show that our model outperforms existing models on the K-VQG dataset.

Dataset

The K-VQG dataset ( kvqg_dataset.zip ) can be downloaded from the link below.

After unzipping the downloaded file, you will get two files: kvqg_train.json and kvqg_val.json.

The training data contains 12,891 questions and the validation data contains 3,207 questions.

Image files can be downloaded from the Visual Genome website.

Note that the image id and object id are consistent with Visual Genome.

Here is an example of the data contained in the dataset.

We adapted the template for this website from IDR.