Kohei Uehara's Website

About me

I am Kohei Uehara (上原 康平), currently working as a research engineer at SB Intuitions Corp. I received my Ph.D. in Information Science and Technology from the University of Tokyo in March 2023.

My research interests include machine learning across vision and language, Large Language Models (LLMs) and Vision-Language Models (VLMs), accessibility, and Human-Computer Interaction (HCI).

Current Positions

- Research Engineer, SB Intuitions Corp.

Work Experience

- April 2023 - March 2025 : Assistant Professor, Machine Intelligence Lab., Research Center for Advanced Science and Technology (RCAST), The University of Tokyo

- June 2023 - March 2025 : Part-Time Researcher, Accessibility Lab., Miraikan (The National Museum of Emerging Science and Innovation)

- July 2023 - March 2025 : Visiting Researcher, Machine Intelligence for Medical Engineering Team, RIKEN

- April 2021 - July 2021 : NVIDIA, Research Internship

- February 2019 - April 2019 : LINE Corporation, Machine Learning Engineer (Part-time)

- August 2018 : Mercari, Inc., Machine Learning Engineer Internship

Education

- April 2020 - March 2023 : Ph.D. student, Information Science and Technology, The University of Tokyo. (Advisor: Prof. Tatsuya Harada)

- April 2018 - March 2020 : Master’s student, Information Science and Technology, The University of Tokyo. (Advisor: Prof. Tatsuya Harada)

- April 2014 - March 2018 : Undergraduate student, Mechano-Informatics, The University of Tokyo. (Advisor: Prof. Tatsuya Harada)

Projects

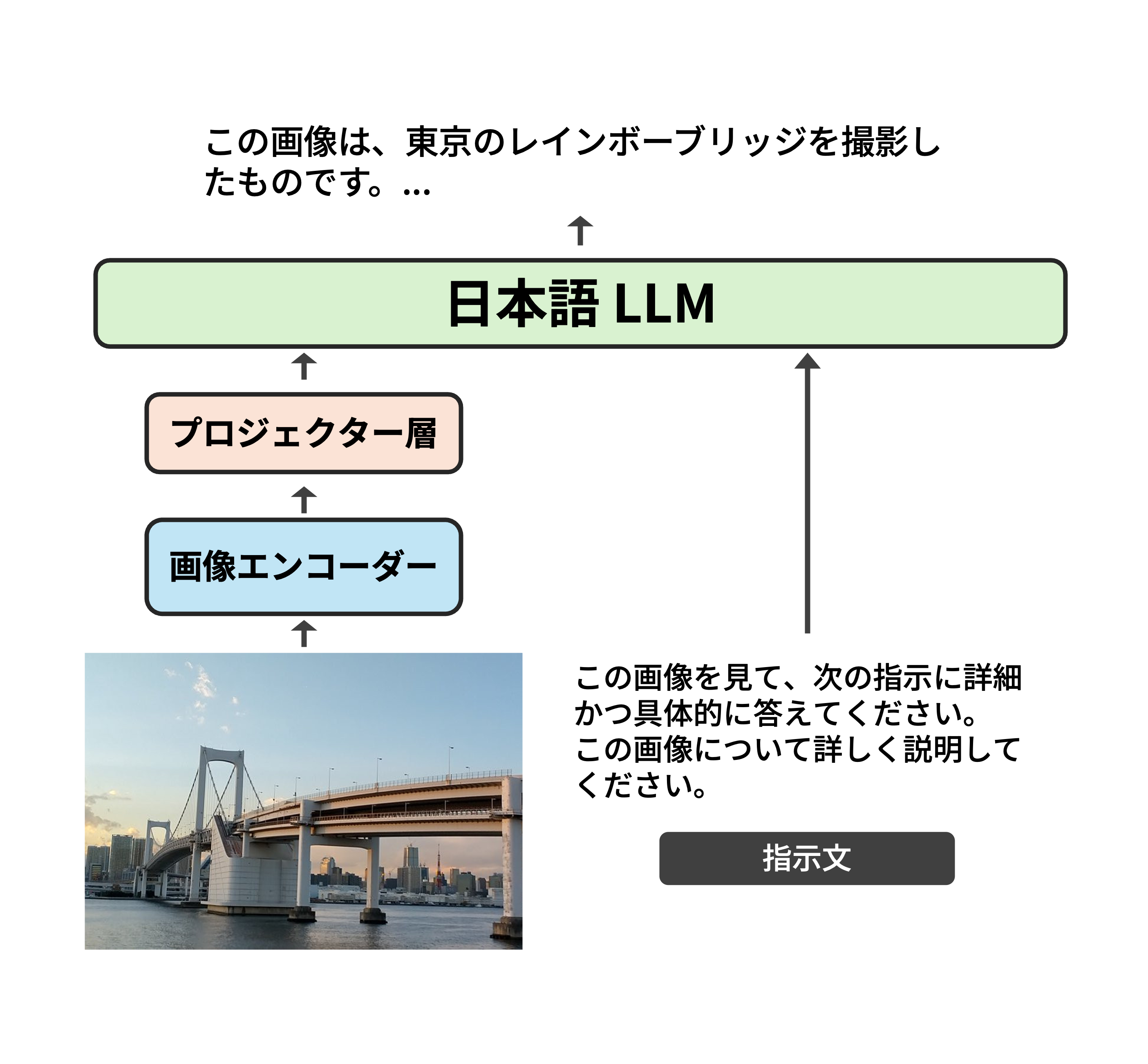

Asagi - Japanese Vision&Language Model

Asagi is a Japanese Vision&Language Model.

Asagi follows the LLaVA architecture, which consists of a vision encoder, a language decoder, and a 2-layer MLP that projects visual features into the language decoder's feature space.

We use Japanese LLMs as the language decoder and a SigLIP-based model as the vision encoder.
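As a rough illustration of the LLaVA-style design described above, here is a pure-Python toy showing how a 2-layer MLP could map vision-encoder patch features into the language decoder's embedding space and how the resulting image tokens join the text sequence. It is a sketch under stated assumptions, not the Asagi implementation: the GELU activation between the two layers (as in the LLaVA-1.5 projector), a hidden width equal to the decoder width, the random initialization, the toy dimensions, and the names `MLPProjector` and `build_decoder_input` are all hypothetical. Real models use a tensor library and much larger dimensions, and LLaVA inserts image tokens at an image placeholder rather than simply prepending them.

```python
import math
import random
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Multiply an (n x k) matrix by a (k x m) matrix."""
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def add_bias(x: Matrix, bias: List[float]) -> Matrix:
    """Add a bias vector to every row of x."""
    return [[v + b for v, b in zip(row, bias)] for row in x]


def gelu(x: float) -> float:
    """Exact GELU, x * Phi(x), computed with the error function (assumed activation)."""
    return 0.5 * x * (1.0 + math.erf(x / math.sqrt(2.0)))


def init_linear(d_in: int, d_out: int, rng: random.Random):
    """Hypothetical small-Gaussian initialization for one linear layer."""
    weight = [[rng.gauss(0.0, 0.02) for _ in range(d_out)] for _ in range(d_in)]
    return weight, [0.0] * d_out


class MLPProjector:
    """Hypothetical 2-layer MLP: vision feature dim -> decoder dim -> decoder dim."""

    def __init__(self, d_vision: int, d_text: int, seed: int = 0):
        rng = random.Random(seed)
        self.w1, self.b1 = init_linear(d_vision, d_text, rng)
        self.w2, self.b2 = init_linear(d_text, d_text, rng)

    def __call__(self, patches: Matrix) -> Matrix:
        # Layer 1 + GELU, then layer 2; one output row per image patch.
        hidden = add_bias(matmul(patches, self.w1), self.b1)
        hidden = [[gelu(v) for v in row] for row in hidden]
        return add_bias(matmul(hidden, self.w2), self.b2)


def build_decoder_input(image_tokens: Matrix, text_embeddings: Matrix) -> Matrix:
    """Simplified: prepend projected image tokens to the text embedding sequence."""
    return image_tokens + text_embeddings


# Toy usage: 4 image patches with 8-dim features, 3 text tokens with 6-dim embeddings.
d_vision, d_text = 8, 6
data_rng = random.Random(1)
patches = [[data_rng.uniform(-1.0, 1.0) for _ in range(d_vision)] for _ in range(4)]
text = [[0.0] * d_text for _ in range(3)]
projector = MLPProjector(d_vision, d_text)
seq = build_decoder_input(projector(patches), text)
print(len(seq), len(seq[0]))  # 7 6
```

The projector only changes the width of each patch vector, so the decoder sees a sequence of image tokens followed by text tokens, all in one embedding space.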

We synthesized a large-scale Japanese Vision&Language dataset of approximately 20 million image-text pairs.

The model is publicly available on the Hugging Face Model Hub.

Please check the project page for more details.

Publications

Journal and International Conference

- NEW Masaki Kuribayashi, Kohei Uehara, Allan Wang, Shigeo Morishima, Chieko Asakawa. WanderGuide: Indoor Map-less Robotic Guide for Exploration by Blind People. In Proceedings of the 2025 CHI Conference on Human Factors in Computing Systems (CHI), 2025. [Paper]

- Kohtaro Tanaka, Kohei Uehara, Lin Gu, Yusuke Mukuta, Tatsuya Harada. Content-Specific Humorous Image Captioning Using Incongruity Resolution Chain-of-Thought. In Findings of the Association for Computational Linguistics (NAACL Findings), 2024.

- Kohei Uehara and Tatsuya Harada. Learning by Asking Questions for Knowledge-Based Novel Object Recognition. International Journal of Computer Vision (IJCV), 2024. [Paper] [Project Page]

- Kohei Uehara and Tatsuya Harada. K-VQG: Knowledge-aware Visual Question Generation for Common-sense Acquisition. WACV, 2023. [Paper] [Project Page]

- Kohei Uehara, Nan Duan, Tatsuya Harada. Learning to Ask Informative Sub-Questions for Visual Question Answering. 5th Multimodal Learning and Applications Workshop (CVPR 2022, Workshop), 2022. [Paper]

- Kohei Uehara†, Yusuke Mori† (†equal contribution), Yusuke Mukuta and Tatsuya Harada. ViNTER: Image Narrative Generation with Emotion-Arc-Aware Transformer. The 1st International Workshop on Multimodal Understanding for the Web and Social Media (WWW 2022, Workshop), 2022. [Paper]

- Kohei Uehara, Tatsuya Harada. Unsupervised Keyword Extraction for Full-sentence VQA. First International Workshop on Natural Language Processing Beyond Text with EMNLP 2020 (NLPBT2020), 2020. [Paper]

- Sho Maeoki, Kohei Uehara, Tatsuya Harada. Interactive Video Retrieval with Dialog. CVPR 2020 Workshop on Multimodal Learning, 2020. [Paper]

- Kohei Uehara, Antonio Tejero-de-Pablos, Yoshitaka Ushiku and Tatsuya Harada. Visual Question Generation for Class Acquisition of Unknown Objects. The 15th European Conference on Computer Vision (ECCV2018), 2018. [Paper]

Domestic Conference

- NEW 上原康平, 黒瀬優介, 安道健一郎, Chen Jiali, Gao Fan, 金澤爽太郎, 坂本拓彌, 竹田悠哉, Yang Boming, Zhao Xinjie, 村尾晃平, 吉田浩, 田村孝之, 合田憲人, 喜連川優, 原田達也. Asagi: A Large-Scale Japanese VLM Leveraging Synthesized Datasets. The 31st Annual Meeting of the Association for Natural Language Processing, 2025. [Paper] [Project Page]

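- 森 友亮†, 上原康平† (†equal contribution), 原田達也. Narrative Generation from Images with a Vision-Language Fusion Transformer Model. CAI+CAI first workshop (Workshop at the 27th Annual Meeting of the Association for Natural Language Processing), 2021. [Paper]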

Others

- Masaki Kuribayashi, Kohei Uehara, Allan Wang, Daisuke Sato, Simon Chu, Shigeo Morishima. Memory-Maze: Scenario Driven Benchmark and Visual Language Navigation Model for Guiding Blind People. arXiv, 2024. [Paper]

- Kohei Uehara, Nabarun Goswami, Hanqin Wang, Toshiaki Baba, Kohtaro Tanaka, Tomohiro Hashimoto, Kai Wang, Rei Ito, Takagi Naoya, Ryo Umagami, Yingyi Wen, Tanachai Anakewat, Tatsuya Harada. Advancing Large Multi-modal Models with Explicit Chain-of-Reasoning and Visual Question Generation. arXiv, 2024. [Paper]

Competitions

- 5th place in the Visual Question Answering (VQA) Challenge 2018 at CVPR 2018. Mikihiro Tanaka, Atsuhiro Noguchi, Kohei Uehara, Lisa Kawai, Yoshitaka Ushiku, Tatsuya Harada [competition page]

Lectures

- Intelligent Informatics - Graduate School of Information Science and Technology, The University of Tokyo, June 6, 2024

Invited Talks

- Kohei Uehara, Antonio Tejero-de-Pablos, Yoshitaka Ushiku and Tatsuya Harada. Visual Question Generation for Class Acquisition of Unknown Objects. FIT, 2019. [Program]

- Kohei Uehara, Antonio Tejero-de-Pablos, Yoshitaka Ushiku and Tatsuya Harada. Visual Question Generation for Class Acquisition of Unknown Objects. MIRU, 2019. [Program]

- Kohei Uehara, Antonio Tejero-de-Pablos, Yoshitaka Ushiku and Tatsuya Harada. Visual Question Generation for Class Acquisition of Unknown Objects. PRMU, 2019. [Program]

Grants & Fellowships

- January 2021 - December 2021 : Microsoft Research Asia Collaborative Research for Ph.D. Student 2021 (D-CORE 2021)

- April 2020 - March 2023 : Japan Society for the Promotion of Science (JSPS) Research Fellowship for Young Scientists (DC1)

Professional Activities

- Reviewer : ICCV, CVPR, ECCV, WACV, AAAI, NeurIPS, etc.

Links

Google Scholar Citations

Last update: April 5, 2025